Technology and its ever-evolving wonders never fail to amaze us. But it's all fun and games until its applications start brewing malicious consequences. The unregulated use of ‘deepfakes’ in recent times, especially in the milieu of the general elections in India, has stirred a hornet’s nest. From clips showing popular Bollywood actors rallying behind the Opposition front to morphed versions of the Prime Minister’s voice in several media bites, the internet is rife with false and misleading videos of prominent faces. In today’s times, when post-truth plays a pivotal role in shaping public sentiment, these engineered pieces of content have the potential to severely impair the democratic process of elections.

What is all the more alarming is that deepfakes are not only perilously convincing, making it all but impossible for the common eye to tell the fake from the real, but also largely unregulated in India, which paves the way for miscreants to escape liability while continuing to mislead and confound.

What are these deepfakes that’ve gotten the Prime Minister worried?

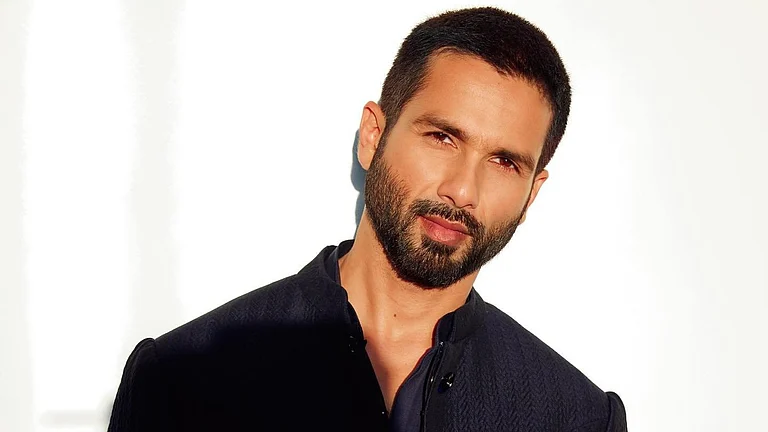

Deepfakes are, in effect, videos and images created with AI-based human image synthesis technology that portray simulations of real-life situations involving individuals, often celebrities and eminent personalities. In our world of filters and memes, images of top leaders in athleisure or the Prime Minister rapping a popular number are palpably for gags. But not all such ‘inventions’ hinge on humour. Remember Rashmika Mandanna’s elevator deepfake video from a few months back, or the recent video of the Home Minister claiming that the BJP will end the reservation system? These fairly plausible video snippets are extremely insidious and can have serious ramifications for the course of the elections.

Now, these novel outputs aren’t to be straitjacketed. After all, creativity in all stretches of its imagination is sheltered under the right to freedom of speech and expression that Article 19 of the Indian Constitution grants us all. However, this right isn’t absolute; it is subject to reasonable restrictions. And the existing legal framework hasn’t kept pace with the changing technology so as to mitigate harm without impinging on rights. Here’s where the ambiguity in the regulatory provisions becomes a double-edged sword.

Need for legal limitations to technology: Taming the shrew without clamping down on the voice of democracy

The growing menace of deepfakes has further jeopardised the already shaky premise of digital laws in India. But India’s response in putting legal restrictions on deepfakes has been knee-jerk at best. In December 2023, in the wake of Mandanna’s deepfake video leak, the President and the Prime Minister both cautioned against the perils of deepfakes, and the Ministry of Electronics and Information Technology (MeitY) hinted at drafting legislation within 10 days to tackle the issue. Almost six months down the line, no legislation whatsoever seems to be on the horizon. All we have is an advisory from the government, released on December 26, 2023, merely urging all internet intermediaries to comply more strictly with the due diligence requirements under the Information Technology Act, 2000 and the allied rules.

The crimes linked with deepfakes can be brought under the fold of existing offences such as impersonation, forgery or fraud. But deepfakes are far more sophisticated and dangerous, so the existing legal framework largely falls short of exerting the necessary curbs.

In the current context, the “Intermediary Rules” of 2021 (“Rules”) are the closest thing we have to a legal embargo on deepfakes. However, just as the name suggests, the ball is largely in the intermediaries’ court. The crux of the Rules is the exemption from liability that intermediaries get for problematic content on their platforms if they comply with the obligations the law places on them. These obligations, however, are over-broad. For instance, Rule 3(1)(b) merely states that intermediaries must make “reasonable efforts” to prohibit misleading content. Ironically, the very same Rules also lay down punishments under Rule 3(1)(b)(v) for “knowingly or intentionally” promoting content that is “patently false, untrue or misleading in nature”. The ambiguity in the phrasing afflicts both ends of the spectrum.

Imagine this in the context of those annoying but innocuous forwards that the elders of our families indulge in. Novices to this overwhelmingly new technology, our parents, uncles and aunts are far from equipped to detect deepfakes on their social media accounts. So, who’s to decide whether they are guilty of intentionally propagating misleading information? For intermediaries to come down on them arbitrarily would be a serious violation of their freedom of speech and expression. On the other hand, the vague threshold of “reasonable efforts” for intermediaries to act against misinformation sets the stage for lapses, both intentional and otherwise. In Mandanna’s case, the law enforcement authorities had to wait for over three months to record Meta’s action against the widely circulated video.

Shuttling the burden of accountability between law enforcement and private parties has only enfeebled all counter-efforts against deepfakes. With their potential to manipulate even elections, deepfakes are no longer a threat only to individuals but to the collective, and no hurried, piecemeal legal action can adequately curb them. The need for a well-codified and comprehensive law against deepfakes is more pressing now than ever.

Yashaswini Basu is Nyaaya’s Outreach Lead, managing strategic partnerships and collaborative content.